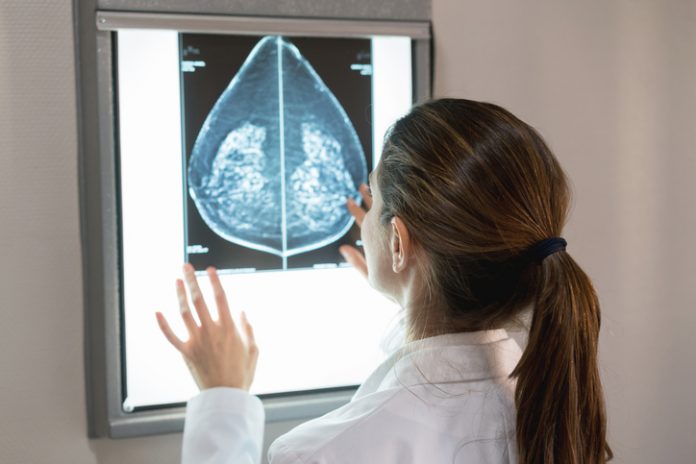

A retrospective study of screening mammograms assessed by an FDA-approved AI algorithm found higher rates of false-positive case scores in African-American women and older women than in White and younger patients. The study also found that false-positive case scores were less likely in Asian patients than in White patients.

The study of 4,855 screening mammograms included women from four broad racial/ethnic groups: White, African-American, Asian, and Hispanic. The results were published in Radiology.

“We wanted to see if an FDA-approved AI algorithm for breast cancer screening performed equally among all patients when considering characteristics such as age, breast density, and race/ethnicity,” explains first author Derek Nguyen, MD, Assistant Professor at Duke University.

The team undertook this analysis as AI algorithms for screening mammography are increasingly being adopted for use by breast imaging screening practices across the nation, primarily to flag mammograms needing further consideration for detected anomalies.

In their study, the screening mammograms were known to be cancer-free, meaning the radiologist did not detect cancer on the mammogram and there was no evidence of breast cancer in the following two years. The screening cohort included 4,855 patients (median age, 54 years) distributed fairly evenly across racial/ethnic groups: 27% (1316 of 4855) White, 26% (1261 of 4855) Black, 28% (1351 of 4855) Asian, and 19% (927 of 4855) Hispanic patients.
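The cohort breakdown can be checked with a few lines of arithmetic. The following is a minimal sketch that uses only the group counts quoted above; the variable names are illustrative and not taken from the study.

```python
# Group counts as reported in the article for the cancer-free screening cohort.
cohort = {"White": 1316, "Black": 1261, "Asian": 1351, "Hispanic": 927}

# The counts should add up to the full cohort of 4,855 patients.
total = sum(cohort.values())

# Share of each group, rounded to the nearest whole percent.
shares = {group: round(100 * n / total) for group, n in cohort.items()}

print(total)
print(shares)
```

The rounded shares should match the percentages reported in the text.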

The researchers then used an AI algorithm to screen those nearly 5,000 mammograms. Because every mammogram in the cohort was known to be cancer-free, any mammogram the algorithm flagged was by definition a false positive.
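To make the logic concrete: in a cancer-free cohort, the false-positive rate for a group is simply the fraction of that group's mammograms the algorithm flags. The sketch below illustrates this with made-up toy records; the group labels, the flags, and the function name `false_positive_rates` are all hypothetical and are not study data. It computes raw rates only and does not reproduce the study's statistical analysis.

```python
from collections import defaultdict

def false_positive_rates(exams):
    """Return the flagged fraction per group.

    `exams` is an iterable of (group, flagged) pairs drawn from a cohort
    in which every exam is known to be cancer-free, so every flag counts
    as a false positive.
    """
    flagged = defaultdict(int)
    totals = defaultdict(int)
    for group, was_flagged in exams:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}

# Hypothetical toy records for illustration only, NOT results from the study.
toy_exams = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]

rates = false_positive_rates(toy_exams)
print(rates)
```

An institution could apply the same kind of tally to its own cancer-free exams to see whether flag rates differ across the patient groups it serves.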

“We found that the false positive rates were not equal among the patient groups; that the AI algorithm was more likely to flag an African-American patient and less likely to flag an Asian patient compared to the White patients,” says Nguyen. Specifically, false-positive case scores were significantly more likely in African-American patients and less likely in Asian patients than in White patients. They were also significantly more likely in older patients (71–80 years) and less likely in younger patients (41–50 years) than in patients aged 51 to 60.

The team believes these results are likely due to how the AI algorithm was trained.

“Our study shows that although these AI algorithms are FDA-approved, their training datasets may not be diverse, thus not representative of the general patient population that breast imaging practices serve nationally,” Nguyen explains. The results depend heavily on the training data used to develop the AI algorithm, which has real implications for clinical practice.

The authors add that the FDA requires approval of AI tools before commercial use, but training on a demographically diverse population is not a required component.

“When an institution is considering or thinking about implementing an AI algorithm into their clinical workflow, they should know the actual patient populations they serve,” Nguyen recommends. For example, if the population they serve is predominantly White and the algorithm under consideration was trained mostly on White patients, it could be a good fit. “But if a specific algorithm’s training dataset included fewer African-American, Asian, or Hispanic patients, and that is actually the predominant populations that they serve, then that algorithm may not be a good fit. It may over- or under-call, depending on the patient’s age or race/ethnicity.”

A simple solution might seem to be checking which AI algorithm was trained on a population resembling that of a given practice or institution. However, the FDA does not require AI algorithm vendors to be transparent about the patient characteristics of the samples used in algorithm development, and that information is not published on any of the AI algorithms’ websites.

“Vendors will state that their algorithm was trained on millions of mammograms, but they don’t specify the patient characteristic breakdown of those mammograms,” says Nguyen. He notes that the algorithm used in this study is based on deep convolutional neural networks and was trained offline on a screening population data set collected from more than 100 institutions and including more than six million training images. “That’s an impressively large number but may not represent all patient groups.”

Therefore, the team advises any organization considering implementing an AI algorithm to reach out to the vendor and specifically ask for the training dataset breakdown. For AI algorithm developers, the team also believes publicizing this information freely on their websites—or intentionally creating products tailored to ethnically diverse populations—would be a strong differentiator in an increasingly competitive AI screening marketplace.

“For many institutions, the focus related to AI algorithms is their ability to detect breast cancer,” says Nguyen. “But this difference in false positive risk is a nuance that can be improved so that the performance of the algorithm can be inclusive of all patients.”