By Tom Lawry

Generative AI is a new and rapidly emerging form of artificial intelligence that has the potential to revolutionize precision medicine by improving diagnosis, treatment, and drug discovery. It comprises Large Language Models and other intelligent systems that replicate a human’s ability to create text, images, music, video, computer code, and more.

So, naturally, when Damian Doherty, Editor-in-Chief of Inside Precision Medicine, approached me about writing an article on Generative AI, the first thing I did was ask the latest version of ChatGPT to provide a 2,800-word manuscript on the opportunities and issues of its application to precision medicine.

The content it generated was relevant, logically organized, and backed up with factual information. Its sentences were precise and easy to understand. There was a formulaic beginning, middle, and end, with the appropriate caveats about the possibility of being wrong.

The result was quite good, but in the end, it was a little too GPT-ish. There were many things my human brain wanted to know that it didn’t cover or guide me towards.

In some ways, this exercise mirrors the deeper discussions and explorations that are just getting underway to both understand our new and evolving AI capabilities and define a logical pathway to help clinicians and researchers make the practice of medicine more precise.

I’ve had the benefit of working with the application of AI in health and medicine for over a decade. Here are my very human thoughts on what should be considered as we approach this opportunity.

An AI taxonomy

Generative AI is a relatively new form of AI that has been released into the wild. As such, there are very few experts. This means that we are all early in the journey of understanding what it is and how we apply it to do good.

The chart below provides a simple taxonomy to help differentiate generative AI from other forms of Predictive Analytics.

While there is a great deal of hype over generative AI, there is a growing body of evidence on the things it can do well with humans in the loop:

- Write clinical notes in standard formats such as SOAP (subjective, objective, assessment, and plan)

- Assign medical codes such as CPT and ICD-10

- Generate plausible and evidence-based hypotheses

- Interpret complex laboratory results

Going forward, generative AI will provide benefits in many areas, including:

Drug Discovery and Development: Assistance in the discovery of new drugs and their development by predicting molecular structures, simulating drug interactions, and identifying potential drug candidates more quickly and accurately. AI can identify existing drugs that could be repurposed for new therapeutic uses, potentially speeding up the drug development process and reducing costs.

Personalized Treatment Plans: Analyze large-scale patient data, including genetic information, medical records, and imaging data, to guide physicians in the creation of personalized treatment plans tailored to an individual’s unique genetic makeup and health profile.

Disease Diagnosis: Assistance in the early and accurate diagnosis of diseases by analyzing medical images, genomic data, and clinical records, helping healthcare professionals make more informed decisions.

Medicine has been here before—change is hard

Since medicine came out of the shadows and into the light as a data-driven, scientific discipline, we’ve always aspired to be better. The reality is that change is hard. It requires us to think and act differently.

When cholera was raging through London in the 1850s, Dr. John Snow was initially rebuffed when he challenged the medical establishment with data demonstrating that the root cause of cholera was polluted water, not bad air as the prevailing view held. His work marked the early stages of epidemiology.

In the 1970s, the introduction of endoscopy into surgical practice met resistance from a surgical community that saw little use for “key-hole” surgery, because the prevailing view and practice held that large problems required large incisions. Today, the laparoscopic revolution is seen as one of the biggest breakthroughs in contemporary medical history.

Generative AI and Large Language Models are part of medicine’s next frontier. They are already challenging current practices across the spectrum of research, clinical trials, medical and nursing school curricula, and the front-line practice of medicine. It’s not a matter of whether they will affect what you do, but rather how and when.

With the right dialogue and guidance from a diverse set of stakeholders, we will create a path forward that leverages the benefits of our evolving creations to improve health and medical practices while ensuring that appropriate guardrails are put in place to monitor and guide their use.

It’s not about going slow. It’s about getting things right

In some ways, the challenge of generative AI today is less about increased AI capabilities and more about the velocity of change it is driving.

Generative AI came screaming into mainstream consciousness in the fall of 2022. ChatGPT, a generative AI product from OpenAI, racked up 100 million users in two months, which at the time was widely reported as the fastest adoption of any consumer application in history. Shortly after ChatGPT reached this milestone, OpenAI released the next version of GPT, GPT-4, with greatly increased capabilities.

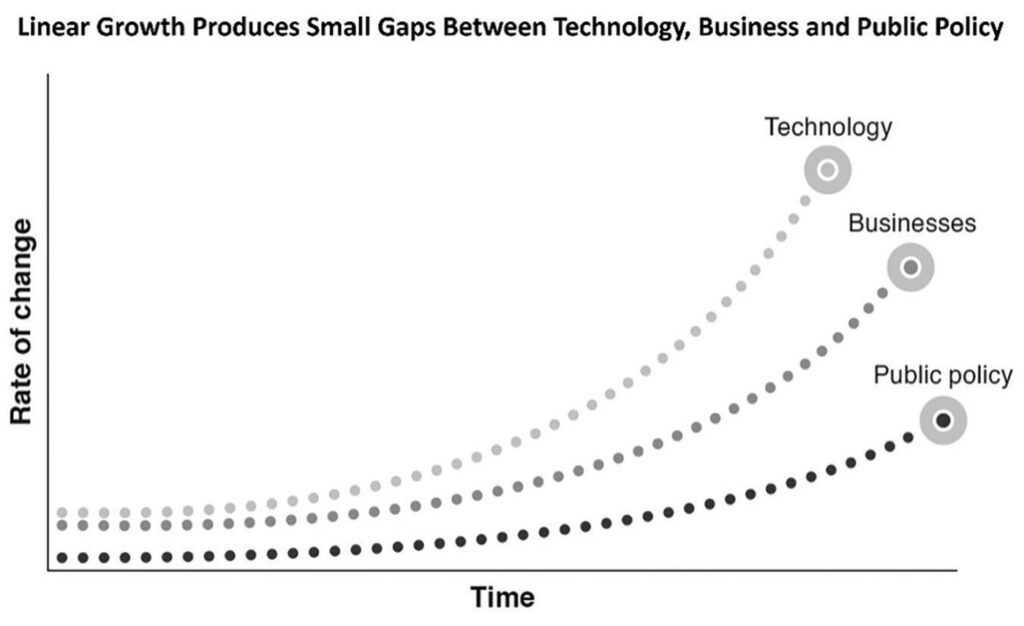

From the practice of medicine to the development of new drugs, generative AI’s “speed of progress” is not following the normal path that economists refer to as linear growth. In linear growth, something new is created that adds incremental value, and there is a small gap between its creation and when it starts being used. As adoption occurs, there is another small gap before policymakers develop the guardrails needed both to guide its use and to safeguard users from risks. Linear growth is steady and predictable, and it is what clinical and operational systems are set up to manage.

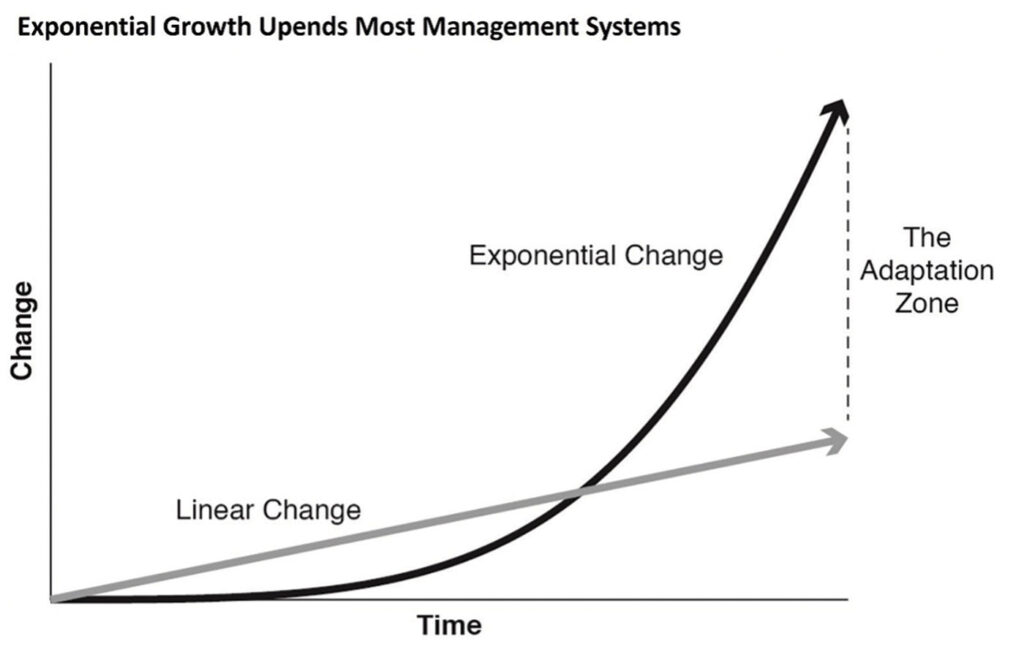

Generative AI is upending linear growth. It’s taking a different trajectory that economists call exponential growth, in which something increases faster the bigger it gets. Most of our systems are not designed to accommodate this dramatic escalation in change. Exponential growth doesn’t last; eventually, the pace of change returns to linear growth. But while it’s happening, it feels like the world is inside a tornado.
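As a simple illustration of the difference (a textbook model added here, not the author’s own figures), linear growth adds a fixed amount a each period, while exponential growth multiplies by a fixed factor 1 + r, so its rate of increase is proportional to its current size:

```latex
\begin{aligned}
L(t) &= L_0 + a\,t, & \frac{dL}{dt} &= a \\
E(t) &= E_0\,(1 + r)^{t}, & \frac{dE}{dt} &= E(t)\,\ln(1 + r)
\end{aligned}
```

For example, starting from 1 with a = 1 and r = 1 (doubling each period), after ten periods the linear path reaches 11 while the exponential path reaches 2^10 = 1,024.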

In June 2023, the European Parliament approved landmark rules for AI, known as the EU AI Act. While the regulation is still far from becoming law, it aims to place generative AI tools under greater restrictions, including a requirement that developers submit these systems for review before releasing them commercially.

The rapid change driven by generative AI has some calling for measures to slow or even suspend AI development to evaluate its impact on humans and society. An open letter from the Future of Life Institute, signed by leaders including Elon Musk, called for a pause of at least six months in the training of AI systems more powerful than GPT-4.

While there is uncertainty in what we are creating and how it should be applied, it is unlikely that any mandates will slow the pace of AI innovation.

Instead of attempting to slow progress, let us expedite education and dialogue among policymakers, medical and research leaders, and frontline practitioners to chart a course for applying our new intelligent capabilities. These groups are also best placed to ensure that the necessary laws, regulations, and protocols are in place to safeguard both those providing and those receiving health and medical services.

In keeping with this “gas and guardrails” approach to AI innovation, there are two important areas to consider.

The creation of enforceable responsible AI principles

Let’s recognize and support the overall good that can come from AI innovation. At the same time, we must be mindful of how our ever-expanding AI capabilities can replicate and even amplify human biases and risks that work against the goal of improving the health and well-being of all citizens.

Prioritizing fairness and inclusion in AI systems is a socio-technical challenge. The speed of progress is spawning a new set of issues for governments and regulators. It’s also confronting the fields of medicine and computer science with new ethical considerations. Ultimately, the question is not only what AI can do, but also what AI should do.

While legislators and regulators work on finding common ground, health and medical organizations using AI today should have a defined set of Responsible AI principles in place to guide the development and use of intelligent solutions. Most often, these principles or guidelines are reviewed and approved at the highest level of leadership and incorporated into an organization’s overall approach to Data Governance.

A framework to manage decisions about decisions

Up until recently, all decisions in health and medicine were made by humans. As AI becomes pervasive in clinical processes it will increasingly make, aid, or impact thousands of granular decisions made each day.

Clinicians, researchers, and leaders must recognize how this impacts decision-making at all levels of an organization. Leaders will need to make a paradigm shift from “making decisions” to creating processes to guide making “decisions about decisions.”

One model gaining traction was put forward by Michael Ross, an executive fellow at London Business School, and James Taylor, author of Digital Decisioning: Using Decision Management to Deliver Business Impact from AI. The model creates a taxonomy well suited to the types of decisions made in providing health and medical services. Categories include:

Human in the loop (HITL): A human is assisted by a machine. In this model, the human is making the decision, and the machine provides only decision support or partial automation of some decisions or parts of decisions. This is often referred to as intelligence amplification (IA).

Human in the loop for exceptions (HITLFE): Most decisions are automated in this model, and humans only handle exceptions. For the exceptions, the system requires some judgment or input from the human before it can make the decision, though it is unlikely to ask the human to make the whole decision. Humans also control the logic to determine which exceptions are flagged for review.

Human on the loop (HOTL): Here, the machine is assisted by a human. The machine makes the micro-decisions, but the human reviews the decision outcomes and can adjust rules and parameters for future decisions. In a more advanced setup, the machine also recommends parameter or rule changes for a human to approve.

Human out of the loop (HOOTL): The machine is monitored by the human in this model. The machine makes every decision, and the human intervenes only by setting new constraints and objectives. Improvement is also an automated closed loop: adjustments based on feedback from humans are themselves automated.
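One way to read the four categories is as different answers to the question “who makes this decision?” The minimal Python sketch below encodes them that way. It is a hypothetical illustration, not part of Ross and Taylor’s model: the `OversightMode` enum, the `route_decision` function, and the `is_exception` flag (a stand-in for the human-controlled logic that flags exceptions for review) are names invented here.

```python
from enum import Enum


class OversightMode(Enum):
    """Hypothetical encoding of the four decision-oversight categories."""
    HITL = "human in the loop"
    HITLFE = "human in the loop for exceptions"
    HOTL = "human on the loop"
    HOOTL = "human out of the loop"


def route_decision(mode: OversightMode, is_exception: bool) -> str:
    """Describe who makes a single micro-decision under a given mode.

    `is_exception` is an assumed flag standing in for whatever
    human-defined rules mark a case for review.
    """
    if mode is OversightMode.HITL:
        # The machine only offers decision support; the human decides.
        return "human decides, machine advises"
    if mode is OversightMode.HITLFE:
        # Routine cases are automated; flagged exceptions need human input.
        return "human input required" if is_exception else "machine decides"
    if mode is OversightMode.HOTL:
        # The machine decides now; a human reviews outcomes afterwards.
        return "machine decides, human reviews outcomes"
    # HOOTL: the machine decides; humans only set constraints and objectives.
    return "machine decides within human-set constraints"


if __name__ == "__main__":
    for mode in OversightMode:
        print(f"{mode.name}: {route_decision(mode, is_exception=True)}")
```

The point of the sketch is only that the exception flag matters in just one mode; in practice, the logic that sets it is itself a “decision about decisions” that leaders would need to govern.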

Over time, AI-driven leaders will create frameworks and new management models that optimize the use of AI in making decisions while supporting clinicians who will remain in control of improved clinical processes and workflows.

AI capabilities will continue to change. How it adds value won’t

In working with health and medical organizations, I’m often surprised how often I see significant investments being made in AI without leaders being able to explain how those investments will actually drive value at scale.

Using AI to transform anything requires a fundamental understanding of the capability differences between Artificial Intelligence (AI) and what I call Natural Human Intelligence (NHI). Once understood, this becomes your superpower. You now have the ability to pair the unique characteristics of each to drive measurable change.

AI allows us to leverage massive quantities of data and information to look for patterns that humans simply don’t have the ability or time to see. AI is good at finding things we care about, such as identifying patients at high risk of readmissions, falls, or unexpected deterioration. Generative AI is increasingly showing it can generate plausible and evidence-based hypotheses and automate activities such as writing clinical notes or assigning diagnostic codes.

By contrast, humans have the unique ability to draw on a set of skills and characteristics that no form of AI can mimic or replicate. As “smart” as AI is becoming, no one has figured out how to imbue machines with qualities that are essential in the world of health and medicine, like wisdom, reasoning, judgment, imagination, critical thinking, experience, a grasp of abstract concepts, and common sense. These attributes remain uniquely human, and they are essential to making decisions in the provision of health and medical services.

The key to AI driving value is planning and applying it in ways that augment the experience and skills of clinicians, researchers, and knowledge workers to help them be better at what they do.

AI in medicine is not about technology. It’s about empowerment

AI has a PR problem. The narrative in the popular press and professional journals is often negative. Headlines like “Half of U.S. Jobs Could Be Eliminated With AI” paint a picture of a future work world dominated by what novelist Arthur C. Clarke called robo-sapiens.

It’s no wonder that people are worried. According to a study by the American Psychological Association, concern about AI’s potential impact on the workplace and jobs is now one of the top issues affecting workers’ mental health.

Generative AI is already impacting today’s workplace and will be the single greatest change affecting the future of work in the next decade. It will impact how all work is done. As you let that statement sink in, recognize that the issues to be addressed go beyond productivity. After all, work brings shape and meaning to our lives and is not just about a job or income.

In this regard, there is growing evidence to suggest that AI can increase not only productivity but also job satisfaction.

In a randomized trial of generative AI, 453 college-educated professionals were given a series of writing tasks to complete. Half were given access to ChatGPT; the control group was not. Among those using ChatGPT, the time taken to complete tasks fell by 40%. Beyond increased productivity, those using ChatGPT reported greater job satisfaction and a greater sense of optimism. Most importantly, inequality between workers decreased.

Done right, AI is not about technology. It’s about empowerment. Properly curated, generative AI will help solve one of the most significant challenges facing healthcare: the shortage of human capital. Here are two immediate opportunities in medicine today.

Keyboard liberation

Many physicians spend more time doing administrative work than they do with patients. One-third of doctors spend 20 or more hours per week doing paperwork. The electronic health record (EHR) creates a virtual 24/7 work environment for physicians. The impact of such “desktop medicine” on their wellness is a challenge for clinicians and organizations alike.

The promise of AI reducing highly repetitive, low-value activities is what Eric Topol, MD, founder and director of the Scripps Research Translational Institute and best-selling author of Deep Medicine, refers to as “keyboard liberation.” A study by McKinsey & Company suggests that using AI and intelligent solutions in healthcare could reduce the lower-value, repetitive activities performed by clinicians by 36%.

Reducing cognitive burden

A physician newly minted in 1950 would go an entire fifty-year career before medical knowledge doubled. Today, it is doubling roughly every 72 days. Without AI to tame this data explosion, the growing cognitive burden affects quality, patient safety, and physician burnout.
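A constant doubling time of T days implies that knowledge grows by a factor of 2^(365/T) each year. The short Python sketch below applies that formula to the two figures above. It assumes a constant doubling rate and a 365-day year, simplifications the estimates themselves do not specify, and `annual_growth_factor` is a name chosen for illustration.

```python
def annual_growth_factor(doubling_time_days: float, days_per_year: float = 365.0) -> float:
    """Multiplicative growth over one year implied by a constant doubling time."""
    return 2 ** (days_per_year / doubling_time_days)


# Doubling once every fifty years (the 1950 figure).
slow = annual_growth_factor(50 * 365.0)
# Doubling roughly every 72 days (today's estimate).
fast = annual_growth_factor(72.0)

print(f"1950: x{slow:.3f} per year; today: x{fast:.1f} per year")
# prints: 1950: x1.014 per year; today: x33.6 per year
```

In other words, under these assumptions the body of knowledge went from growing about 1.4% a year to multiplying more than thirtyfold a year.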

Today, the typical EHR contains 50,000 data points that physicians must sift through each day. Primary care physicians spend more than an hour each day processing notifications.

Value from the exponential growth of data will only be realized by humans harnessing the power of AI.

The effective introduction and use of generative AI in health and medicine enables both cost-cutting automation of routine work and value-adding augmentation of human capabilities. As it and other forms of AI become pervasive in health and medicine, a new intelligent health system will emerge. It will facilitate systems that improve health while delivering greater value. It will provide a more personalized experience for consumers and patients. It will liberate clinicians and restore them to being the caregivers they want to be, rather than the data entry clerks we turn them into by forcing them to use systems and processes conceived decades ago.

And while generative AI is coming at us fast with much to understand in how we use it, it could not have come at a better time.

References

- Peter Lee, Carey Goldberg, Isaac Kohane, The AI Revolution in Medicine: GPT-4 and Beyond, Pearson Education, 2023

- Theodore H. Tulchinsky, MD MPH, John Snow, Cholera, the Broad Street Pump; Waterborne Diseases Then and Now, Case Studies in Public Health, March 30, 2018

- G. S. Litynski, Endoscopic surgery: the history, the pioneers, World Journal of Surgery, August 1999; 23(8):745-53, doi: 10.1007/s002689900576, PMID: 10415199

- Ryan Browne, EU lawmakers pass landmark artificial intelligence regulation, CNBC, June 14, 2023.

- Pause Giant AI Experiments: An Open Letter, Future of Life Institute, March 22, 2023.

- Michael Ross and James Taylor, Managing AI Decision-Making Tools, Harvard Business Review, November 10, 2021.

- Ibid

- business.rchp.com/home-2/half-of-all-jobs-eliminated

- Arthur C. Clarke, Britannica.

- Worries about artificial intelligence, surveillance at work may be connected to poor mental health, American Psychological Association, September 7, 2023.

- Shakked Noy, Whitney Zhang, Experimental evidence on the productivity effects of generative artificial intelligence, Science, July 13, 2023.

- The Medscape Physician Compensation Report, Medscape, 2021.

- Michael Chui, Where Machines Could Replace Humans and Where They Can’t (Yet), McKinsey & Company, 2017.

- Brenda Corish, Medical knowledge doubles every few months; how can clinicians keep up?, Elsevier, April 23, 2018.

- Information Overload: Why Physicians Are Inundated With Data (And How to Manage It), Spok.

- Jacqueline LaPointe, Study Supports Alarm Fatigue Concern with Physician EHR Use, EHRIntelligence, March 16, 2016.

Generative AI: Technology that creates content, including text, images, video, and computer code, by identifying patterns in large quantities of training data and then creating new, original material with similar characteristics.

Large Language Models: A type of neural network that learns skills, including generating prose, conducting conversations, and writing computer code, by analyzing vast amounts of text from across the internet. Their basic function is to predict the next word in a sequence, but these models have also surprised experts by learning new abilities.